As machine learning and AI grow rapidly across the IT, automotive, financial, and medical industries, VMware has collaborated with NVIDIA to increase the productivity of vSphere and bring AI to the data center. The result is NVIDIA AI Enterprise, a software suite certified on vSphere that provides NVIDIA technologies and software for rapid deployment, management, and scaling of AI workloads in the modern hybrid cloud.

In this post, we will configure the new Multi-Instance GPU (MIG) functionality on an NVIDIA Ampere A100 GPU. MIG is a new capability supported by the vGPU drivers that further enhances the vGPU approach to sharing physical GPUs: it provides stronger physical isolation between the share of the GPU’s compute power and memory assigned to one VM and the shares assigned to other VMs. On vSphere 7 with an NVIDIA A100, you can share a single GPU across up to 7 VMs, migrate VMs that use MIG instances to other A100 hosts with vMotion, and use DRS to balance MIG workloads for optimal performance, spreading load across the available GPUs and avoiding performance bottlenecks.

I’ll be using an NVIDIA A100 PCIe 80GB GPU in a Dell EMC PowerEdge R750 vSAN Ready Node running vSphere 7 with vSAN.

Prerequisites

NVIDIA License

Using the A100 PCIe 80GB GPU requires an NVIDIA AI Enterprise license (it is listed as NVAIE_Licensing-1.0 under your entitlements in the NVIDIA Licensing Portal). It is not possible to use GRID vGPU with this GPU. The GPU also supports only Linux guest operating systems and can be used only for compute (graphics are not supported).

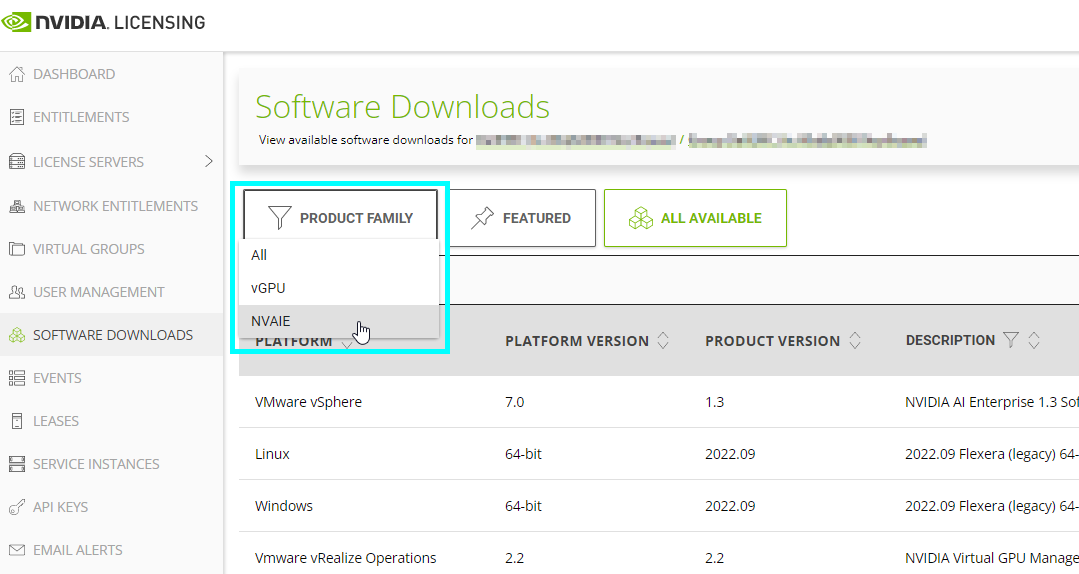

1. Log in to the NVIDIA Licensing Portal (NLP) Software Downloads page (nvidia.com) and choose NVAIE under “Product Family”:

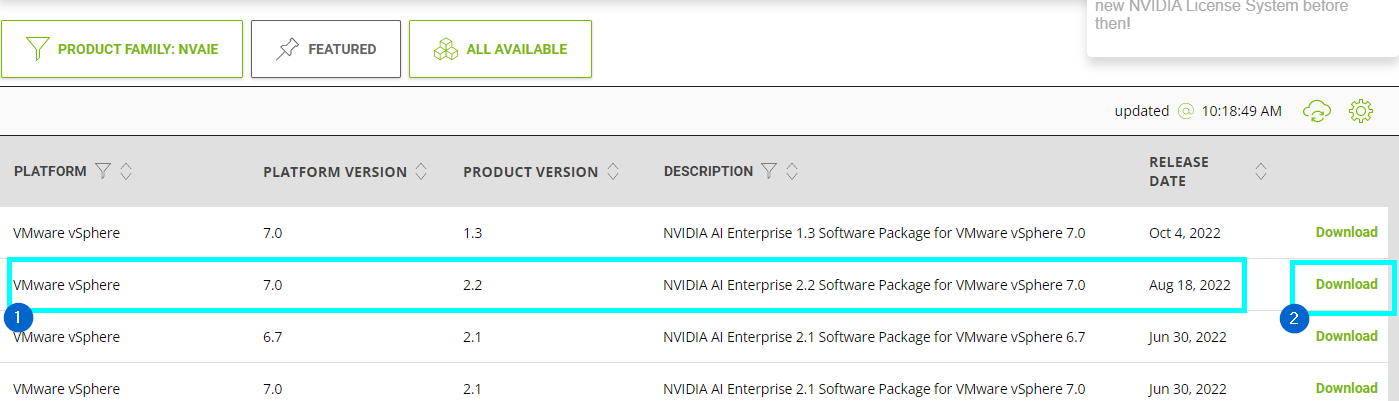

2. Choose your platform version and click Download:

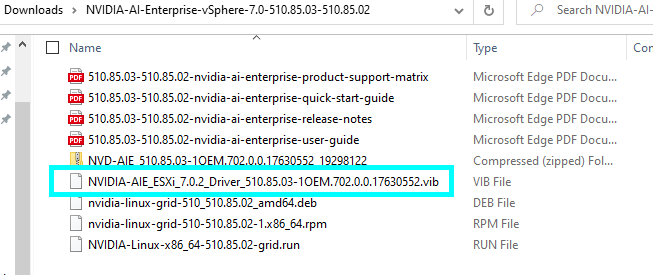

3. You can find the ESXi driver inside the ZIP file:

Hardware settings

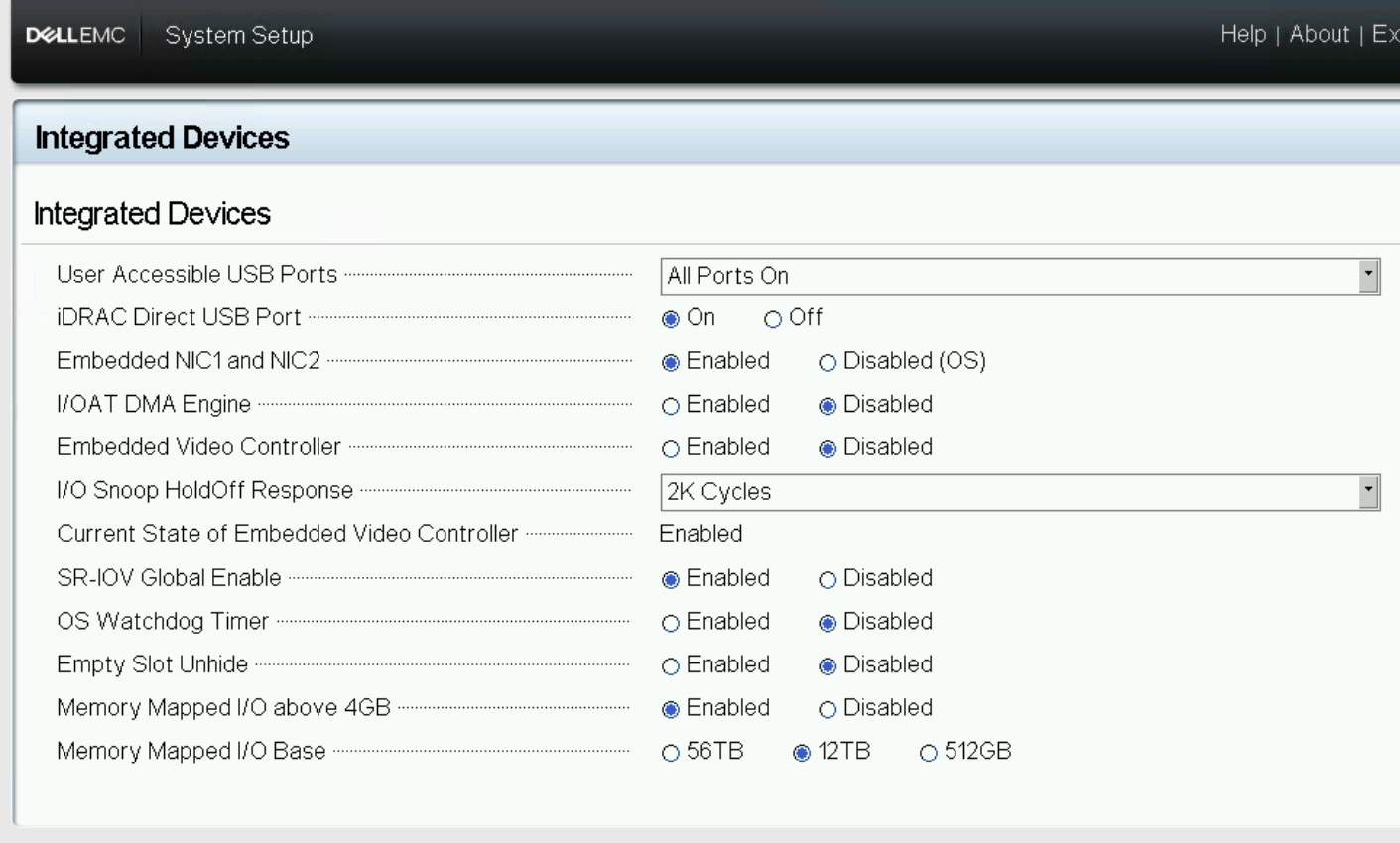

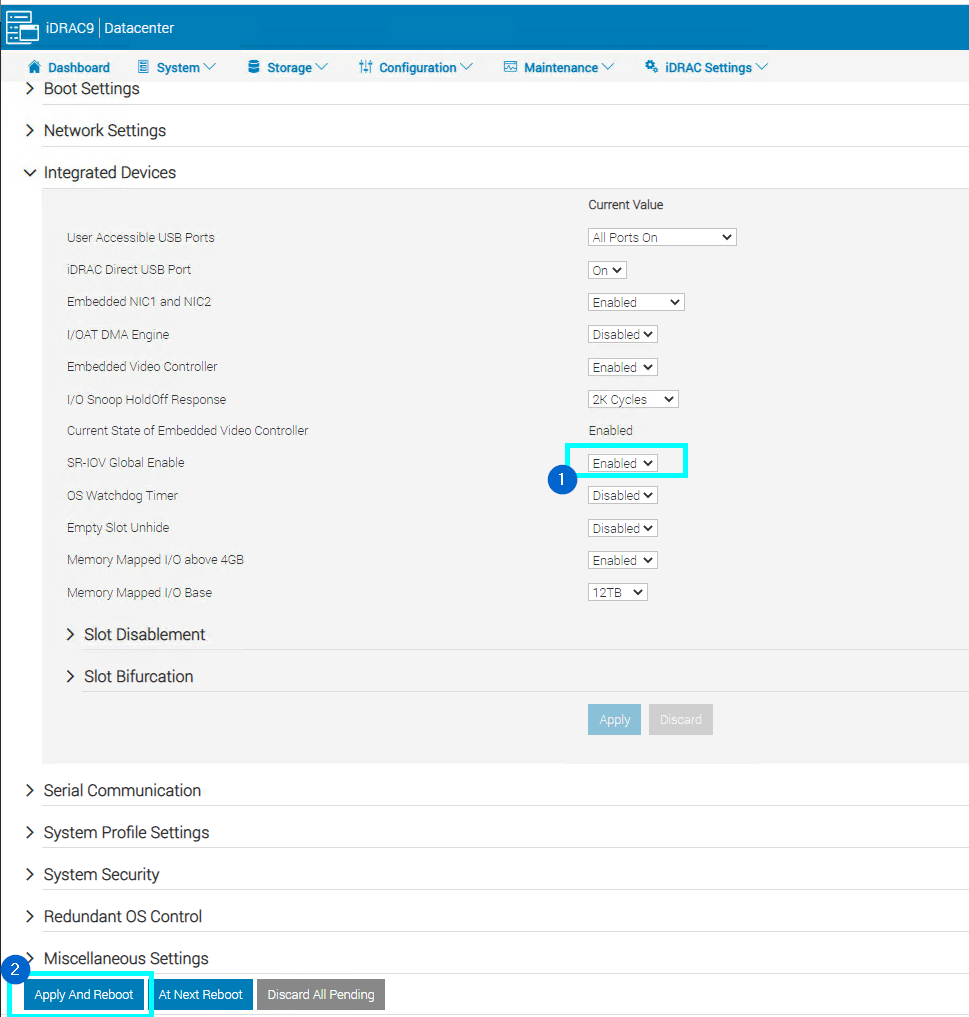

Enable the “Global SR-IOV” feature in the vSphere host BIOS through the server management console (in my case iDRAC). SR-IOV is a prerequisite for running vGPUs on MIG. SR-IOV is not a prerequisite for MIG itself; rather, SR-IOV and the vGPU driver act as wrappers around a GPU Instance. A GPU Instance is associated with an SR-IOV Virtual Function when the VM and its vGPU boot.

1. You can enable it in the BIOS under System Setup Main Menu > System BIOS > System BIOS Settings > Integrated Devices > SR-IOV Global Enable > Enabled

Alternatively, enable it through iDRAC and then apply the configuration by clicking Apply and Reboot.

vSphere Settings

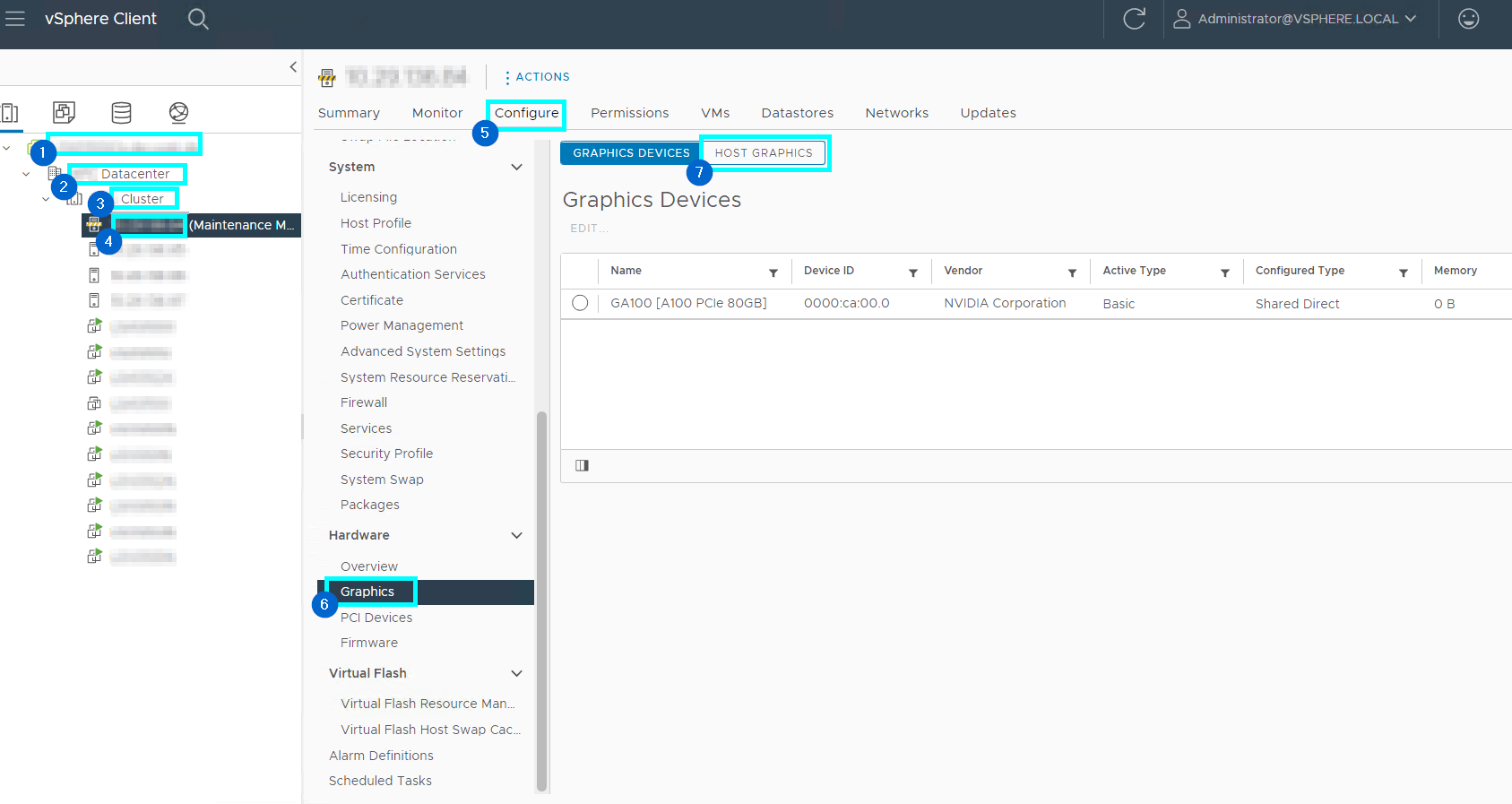

A GPU card can be configured in one of two modes: vSGA (shared virtual graphics, shown as Shared in the vSphere Client) and vGPU (shown as Shared Direct). The NVIDIA card must be configured in vGPU mode, which is what allows the GPU to be used for compute workloads such as machine learning or high-performance computing applications.

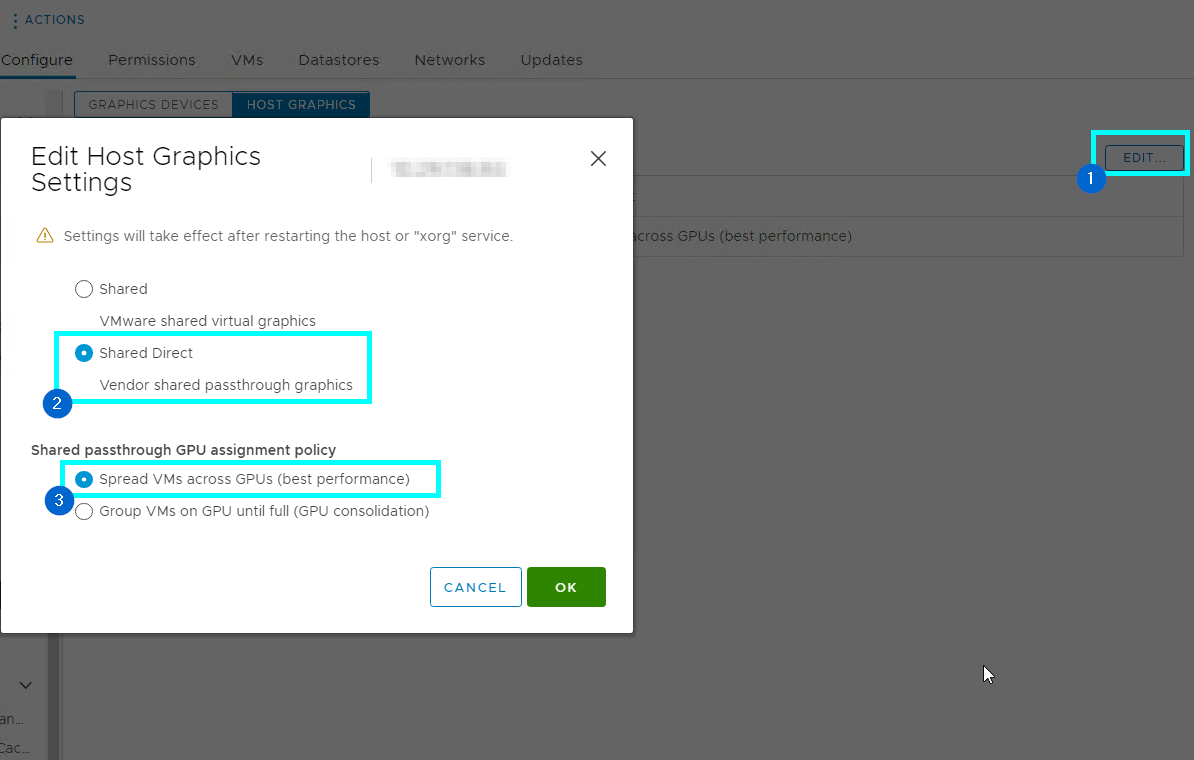

1. Log in to the vSphere Client, navigate to vCenter > Datacenter > Cluster > Host > Configure > Graphics, and click Host Graphics.

2. Under Host Graphics, click Edit and choose Shared Direct and Spread VMs across GPUs. Reboot the host after changing the settings.

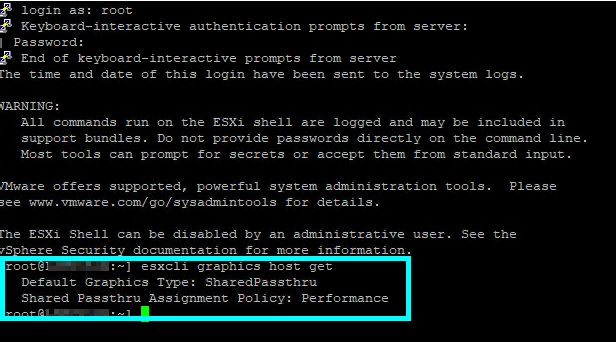

Alternatively, access the ESXi host through the ESXi Shell or SSH and execute the following command:

esxcli graphics host set --default-type SharedPassthru

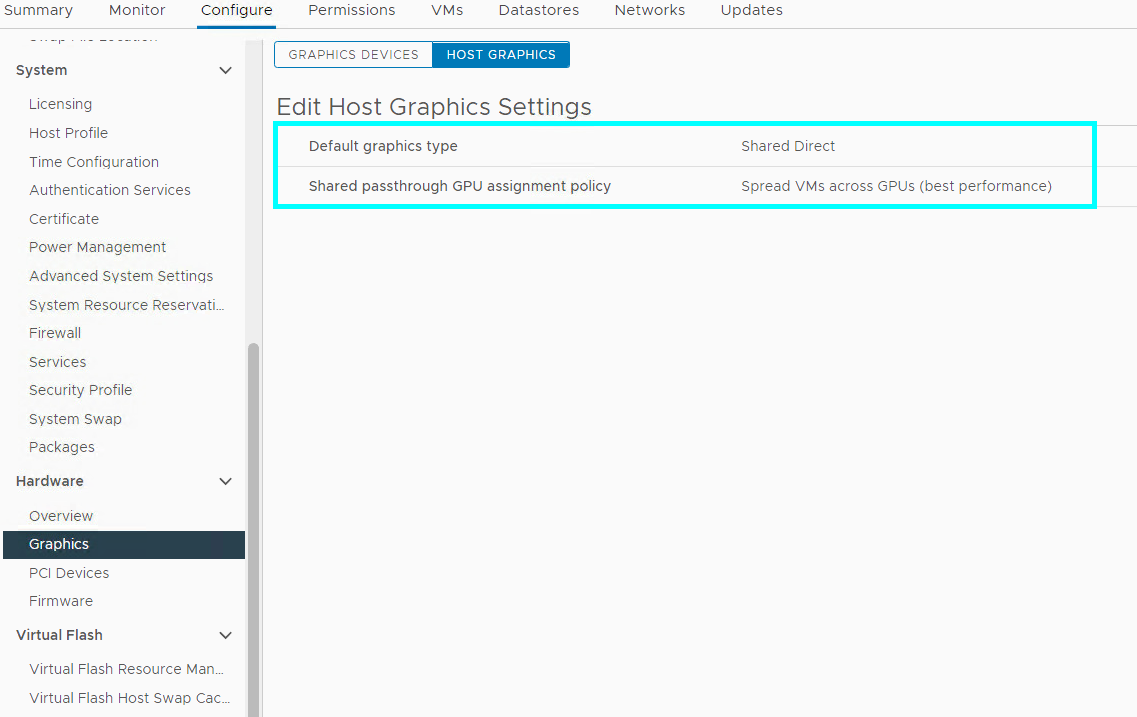

3. Check the settings. The settings should appear as:

Or check from the command line that the settings have taken effect by typing the following command:

esxcli graphics host get

This command should produce the output as follows:

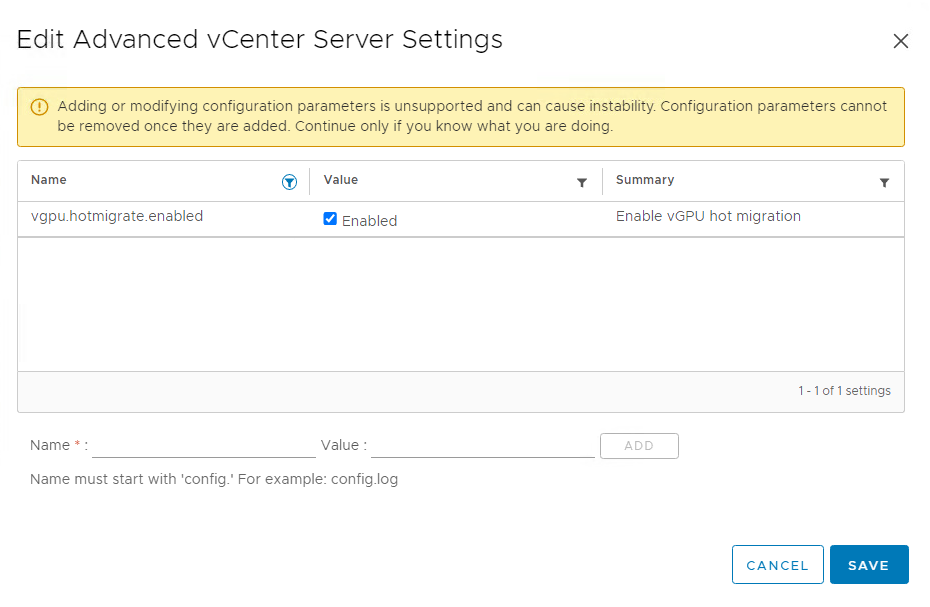

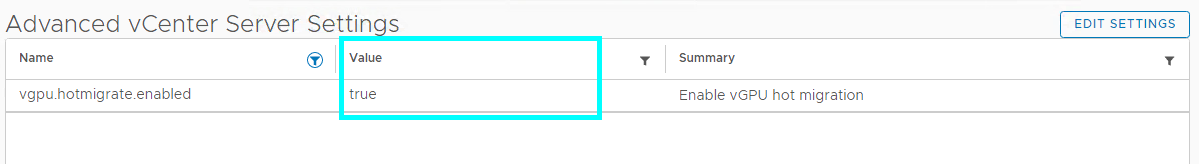

4. To enable VMware vMotion with vGPU, an advanced vCenter Server setting must be enabled (suspend-resume for VMs configured with vGPU, by contrast, is enabled by default). Select the vCenter Server instance and click the Configure tab. In the Settings section, select Advanced Settings and click Edit. In the Edit Advanced vCenter Server Settings window that opens, type vGPU in the search field.

When the vgpu.hotmigrate.enabled setting appears, set the Enabled option and click OK.

You can then check the Advanced Settings list to confirm that the setting has been enabled successfully.
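If you prefer to script the check from step 3 instead of reading the output by eye, the following Python sketch parses the output of esxcli graphics host get. It assumes the default human-readable output with one “Key: Value” pair per line, and it assumes that “Spread VMs across GPUs” corresponds to the Performance assignment policy. The function names are hypothetical helpers, not part of any VMware tool.

```python
import subprocess
from typing import Dict


def parse_key_values(text: str) -> Dict[str, str]:
    """Turn 'Key: Value' lines into a dict; lines without a colon are skipped."""
    settings = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:  # only lines that actually contain a colon
            settings[key.strip()] = value.strip()
    return settings


def is_vgpu_ready(settings: Dict[str, str]) -> bool:
    """True when the host defaults to Shared Direct (SharedPassthru) and
    spreads VMs across GPUs (assumed to be the 'Performance' policy)."""
    return (
        settings.get("Default Graphics Type") == "SharedPassthru"
        and settings.get("Shared Passthru Assignment Policy") == "Performance"
    )


def read_host_graphics() -> Dict[str, str]:
    """Run esxcli and parse its output (only works where esxcli exists)."""
    result = subprocess.run(
        ["esxcli", "graphics", "host", "get"],
        capture_output=True, text=True, check=True,
    )
    return parse_key_values(result.stdout)
```

Assuming a Python 3 interpreter is available wherever esxcli runs, is_vgpu_ready(read_host_graphics()) returns True once both host graphics settings are in place. Keeping the parsing separate from the esxcli call makes the check easy to try against saved output.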

Install the NVIDIA vGPU Manager VIB on ESXi

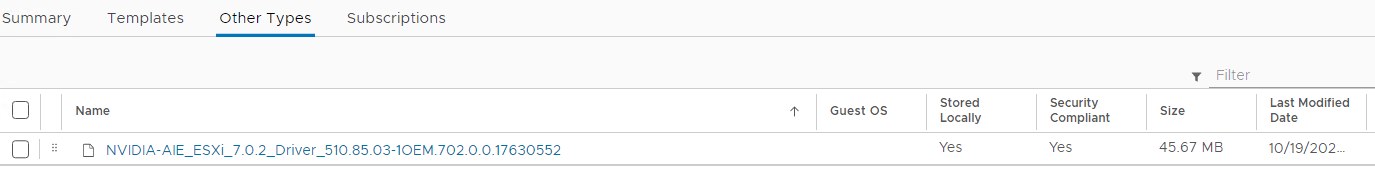

1. Upload the .VIB file to an ESXi datastore (local or vSAN) using WinSCP or the scp command. You can also upload it to a Content Library you have previously configured:

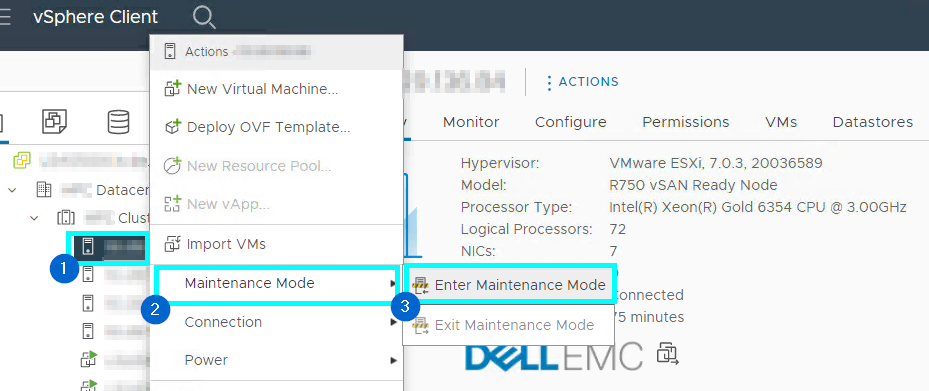

2. Before proceeding with the vGPU Manager installation, make sure that all VMs are powered off, migrated away, or quiesced, and place the ESXi host in maintenance mode.

You can place the host into maintenance mode from the command prompt by entering:

esxcli system maintenanceMode set --enable=true

This command will not return a response.

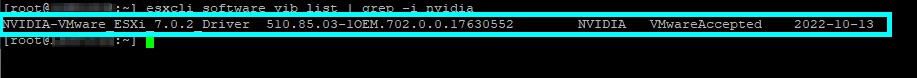

3. After placing the host into maintenance mode, use the following command to check whether a different NVIDIA VIB is already installed:

esxcli software vib list | grep -i nvidia

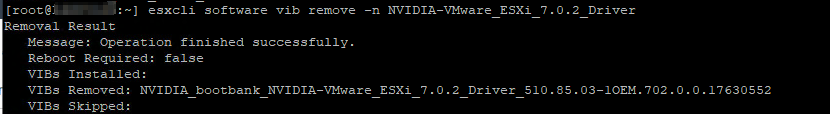

4. If an older or GRID VIB is installed, remove it with the following command:

esxcli software vib remove -n NVIDIA-VMware_ESXi_7.0.2_Driver

5. Although the remove command’s output says “Reboot Required: false”, the ESXi host still needs a reboot:

6. Reboot the ESXi host from the vSphere Web Client by right-clicking the host and selecting Reboot or reboot the host by entering the following at the command prompt:

reboot

This command will not return a response. When you reboot from the command line, there is no need to enter a descriptive reason in the “Log a reason for this reboot operation” field that the vSphere Client asks for.

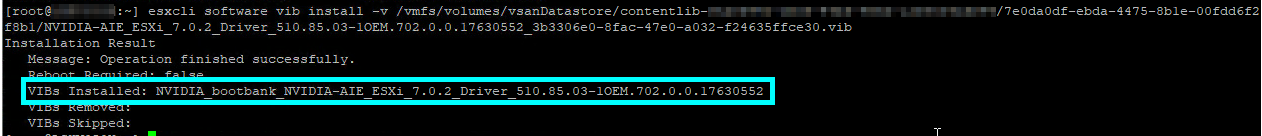

7. Access the ESXi host via the ESXi Shell or SSH again and install the package with the following command:

esxcli software vib install -v directory/NVIDIA-AIE_ESXi_7.0.2_Driver_510.85.03-1OEM.702.0.0.17630552_3b3306e0-8fac-47e0-a032-f24635ffce30.vib

In my case, the VIB resides on a vSAN datastore in a Content Library folder:

esxcli software vib install -v /vmfs/volumes/vsanDatastore/contentlib-xxxxxxxxxxxxxxxxxxxxxxxxx/7e0da0df-ebda-4475-8b1e-00fdd6f2f8b1/NVIDIA-AIE_ESXi_7.0.2_Driver_510.85.03-1OEM.702.0.0.17630552_3b3306e0-8fac-47e0-a032-f24635ffce30.vib

The directory is the absolute path to the directory that contains the VIB file. You must specify the absolute path even if the VIB file is in the current working directory.

8. Reboot the ESXi host again. Although the output states “Reboot Required: false”, a reboot is necessary for the VIB to load and Xorg to start.

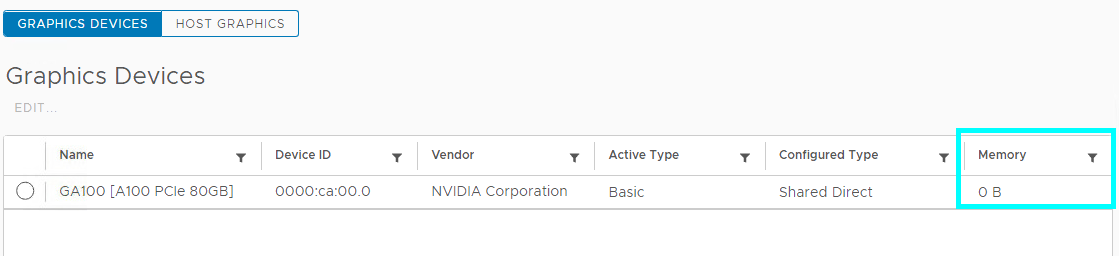

9. Previously, our GPU was shown under Host Graphics with Memory 0 B

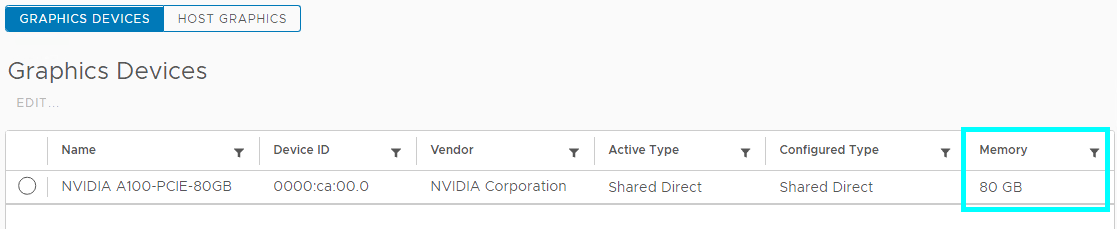

and now it says Memory 80 GB

10. After the ESXi host has rebooted, verify the installation of the NVIDIA vGPU software package. First, check the installed VIB with the following command:

esxcli software vib list | grep NVIDIA

11. Verify that the NVIDIA AI Enterprise software package is installed and loaded correctly by checking for the NVIDIA kernel driver in the list of loaded kernel modules with this command:

vmkload_mod -l | grep nvidia

If the NVIDIA driver is not listed in the output, check dmesg for any load-time errors reported by the driver.

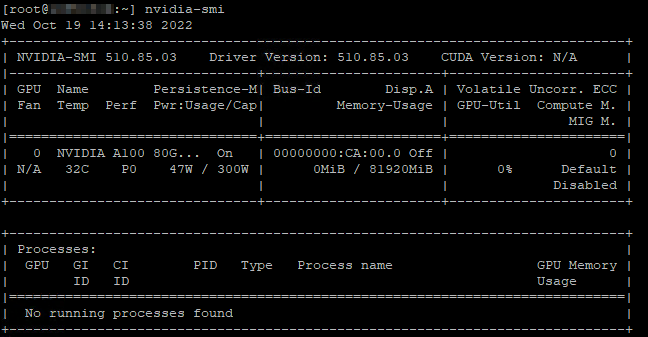

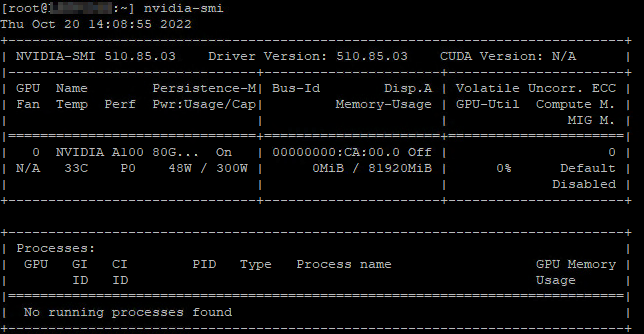

12. Verify that the NVIDIA kernel driver can successfully communicate with the NVIDIA physical GPUs in your system by running the nvidia-smi command.

nvidia-smi

The nvidia-smi command is described in more detail in the NVIDIA System Management Interface (nvidia-smi) documentation.

Running the nvidia-smi command should produce a listing of the GPUs in your platform.

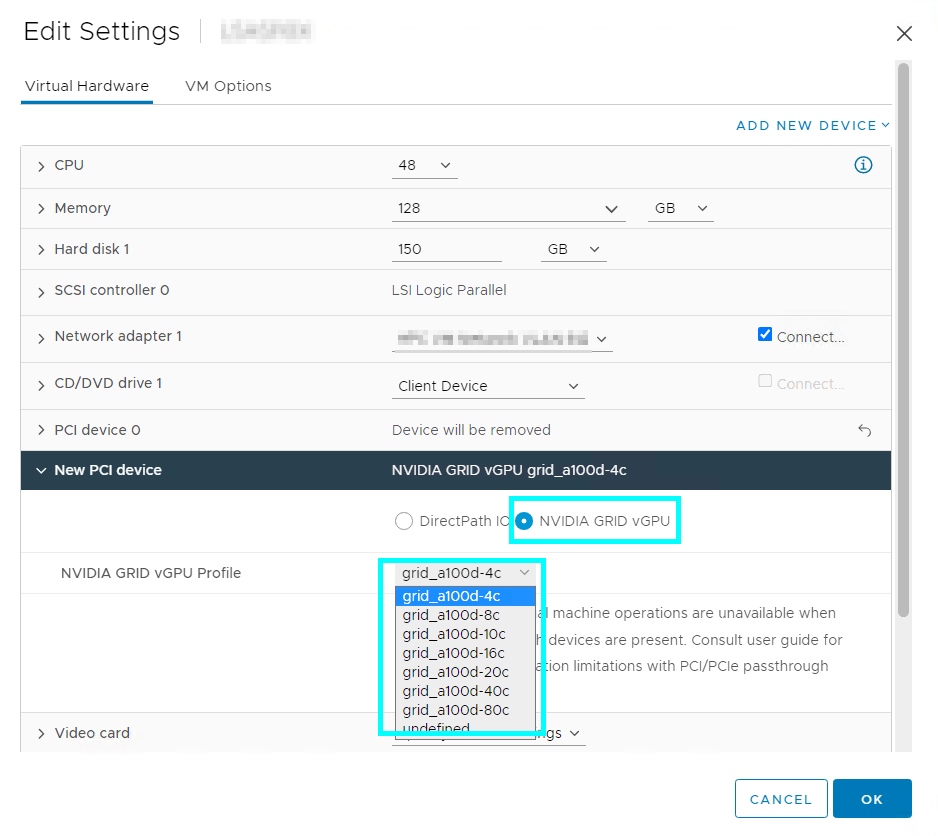

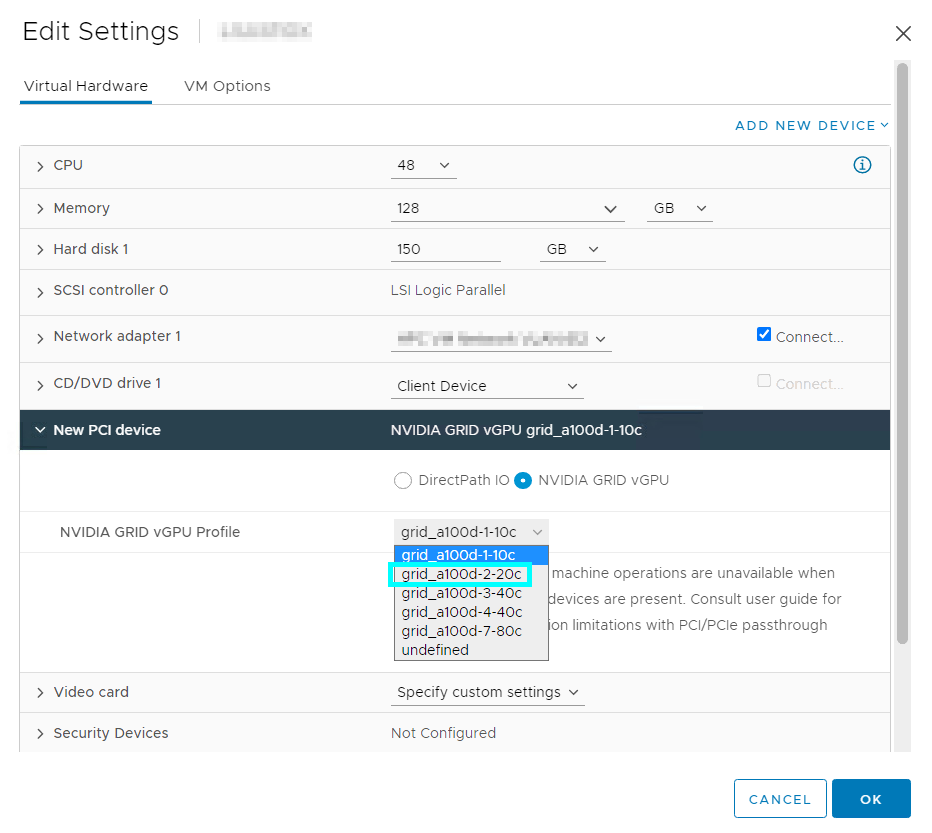
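The VIB checks in steps 3, 4 and 7 of this section can also be driven from a script. The following Python sketch filters the esxcli software vib list output for NVIDIA VIBs, builds the matching remove commands, and refuses to build an install command for a relative path, mirroring the absolute-path requirement described above. It assumes the VIB name is the first whitespace-separated column of the list output; all function names are hypothetical.

```python
import posixpath
from typing import List


def nvidia_vib_names(vib_list_output: str) -> List[str]:
    """Return VIB names containing 'nvidia' (case-insensitive).

    Assumes the first column of each `esxcli software vib list` line is the
    VIB name; header and separator lines never match.
    """
    names = []
    for line in vib_list_output.splitlines():
        fields = line.split()
        if fields and "nvidia" in fields[0].lower():
            names.append(fields[0])
    return names


def remove_commands(names: List[str]) -> List[List[str]]:
    """One `esxcli software vib remove -n <name>` command per VIB."""
    return [["esxcli", "software", "vib", "remove", "-n", name] for name in names]


def install_command(vib_path: str) -> List[str]:
    """Build the install command, enforcing the absolute-path rule."""
    if not posixpath.isabs(vib_path):
        raise ValueError(f"VIB path must be absolute, got {vib_path!r}")
    return ["esxcli", "software", "vib", "install", "-v", vib_path]
```

Each command comes back as an argument list that could be passed to subprocess.run(cmd, check=True) on the host. The helpers only build commands; the reboots described above are still required after removing and after installing.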

Time-sliced NVIDIA GRID vGPU mode

When choosing the type of vGPU support to give a VM, you use the NVIDIA GRID vGPU Profile choices in the vSphere Client. Here is the set of vGPU profiles available on an A100-equipped host for the time-sliced vGPU drivers. The number before the final “c” in the NVIDIA vGPU profile name is the amount of frame buffer memory in GB (that is, memory on the GPU itself) that the profile assigns to a VM. Here, we are choosing to have 4 GB assigned to our VM.

The traditional time-sliced NVIDIA vGPU mode does not provide strict hardware-level isolation between VMs that share a GPU. It schedules jobs onto collections of cores called streaming multiprocessors (SMs) according to user-selectable scheduling policies: best effort, equal share, or fixed share. All cores on the GPU can be used in time-sliced NVIDIA vGPU mode, as can all the hardware pathways through the cache and crossbars to the GPU’s frame buffer memory.

You can tell the time-sliced NVIDIA vGPU profiles from the MIG mode profiles by their names. Time-sliced profiles are shown as, for example, grid_a100d-4c, while MIG mode profiles contain an additional field for the number of compute slices, for example grid_a100d-2-20c. In both cases, the number immediately preceding the final “c” (for “Compute”) indicates the amount of frame buffer memory in GB that the profile allocates to the VM.
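The naming rule above can be captured in a small parser. This Python sketch assumes profile names always follow the grid_&lt;board&gt;-[&lt;slices&gt;-]&lt;GB&gt;c pattern seen in this post; names that do not match are rejected rather than guessed. VgpuProfile and parse_profile are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Matches "grid_a100d-4c" (time-sliced) and "grid_a100d-2-20c" (MIG-backed):
# board, optional compute-slice count, frame buffer in GB, trailing "c".
_PROFILE_RE = re.compile(
    r"^grid_(?P<board>[a-z0-9]+)-(?:(?P<slices>\d+)-)?(?P<fb>\d+)c$"
)


@dataclass(frozen=True)
class VgpuProfile:
    board: str
    framebuffer_gb: int
    mig_slices: Optional[int]  # None for time-sliced profiles

    @property
    def is_mig(self) -> bool:
        return self.mig_slices is not None


def parse_profile(name: str) -> VgpuProfile:
    """Split a compute vGPU profile name into its parts."""
    match = _PROFILE_RE.match(name.lower())
    if match is None:
        raise ValueError(f"not a compute vGPU profile name: {name!r}")
    slices = match.group("slices")
    return VgpuProfile(
        board=match.group("board"),
        framebuffer_gb=int(match.group("fb")),
        mig_slices=int(slices) if slices else None,
    )
```

For example, parse_profile("grid_a100d-4c") describes a time-sliced profile with 4 GB of frame buffer, while parse_profile("grid_a100d-2-20c") describes a MIG-backed profile with 2 compute slices and 20 GB.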

Configure MIG

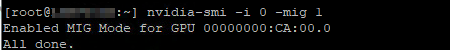

1. To enable MIG on a particular GPU on the ESXi host server, issue the following command:

nvidia-smi -i 0 -mig 1

where -i refers to the physical GPU ID, since there may be more than one physical GPU on a machine. If no GPU ID is specified, then MIG mode is applied to all the GPUs on the system.

2. Continue with a second command as follows:

nvidia-smi --gpu-reset

That second command resets the GPU only if no running processes are currently using it. If nvidia-smi --gpu-reset reports that processes are still using the GPU, reboot the server instead, but check with the owners of any running processes or VMs before doing so.

3. After the GPU reset (or reboot), with the host back up in maintenance mode, issue the command:

nvidia-smi

and you should see that MIG is now enabled:

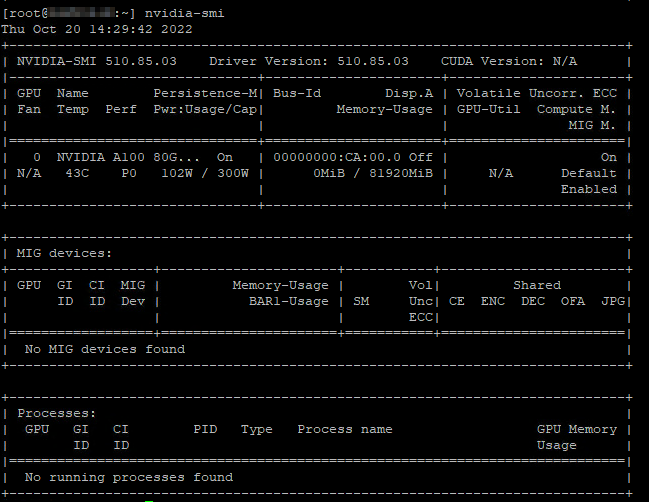

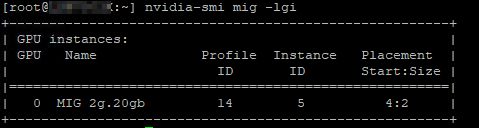

4. To check the set of allowed GPU instance profiles, use the following command:

nvidia-smi mig -lgip

At this point, there are no GPU Instances created, so “Instances Free” is zero for each profile.

5. Now create a GPU instance using the command:

nvidia-smi mig -i 0 -cgi 14

In my case, I chose GPU ID zero (-i), on which I created a GPU Instance using profile ID 14 (MIG 2g.20gb). This GPU Instance occupies 20 GB of frame buffer memory.

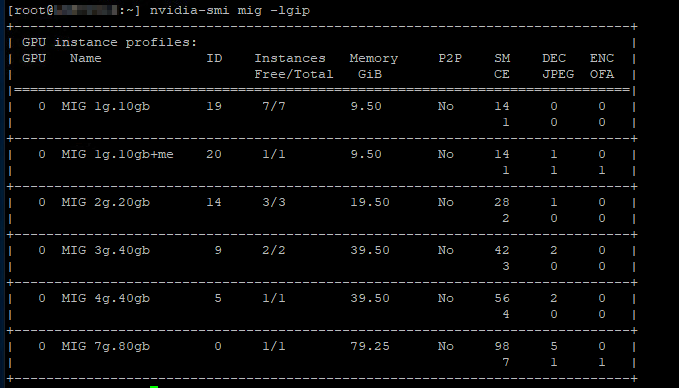

6. To list the new GPU instance use:

nvidia-smi mig -lgi

7. Now create one or more Compute Instances within a GPU instance. First list the available Compute Instance Profiles:

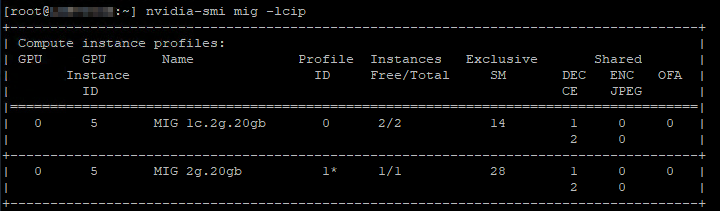

nvidia-smi mig -lcip

Notice that the Compute Instance profiles shown are compatible with the GPU Instance we created: they have the same “2g.20gb” in their names.

8. Now pick the existing GPU Instance, which in my case has ID 5, and create the Compute Instance on it:

nvidia-smi mig -gi 5 -cci 1

where -gi 5 selects the GPU Instance and -cci 1 is the Compute Instance Profile ID being applied. In the -lcip output, the asterisk next to a Compute Instance Profile ID indicates that the profile occupies the full GPU Instance and is the default.

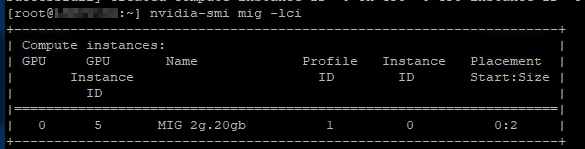

9. You can list the new Compute Instance with:

nvidia-smi mig -lci

10. Now assign the vGPU profile to the VM in the vSphere Client. Choosing that vGPU profile associates the GPU Instance we created with the VM and enables the VM to use that particular slice of the GPU hardware. In “Edit Settings”, choose “Add New Device”, select the NVIDIA GRID vGPU PCI device type, and pick the profile. In our example, because we created our GPU Instance with the “MIG 2g.20gb” profile, the applicable vGPU profile for the VM is “grid_a100d-2-20c”, as it maps directly to that GPU Instance type. After this vGPU profile assignment, the association between the GPU Instance and an SR-IOV Virtual Function (VF) takes place when the VM’s guest OS boots and the vGPU is started.
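The MIG steps above boil down to a few nvidia-smi invocations plus a naming rule for the matching vGPU profile. The Python sketch below captures both. The profile IDs and per-GPU instance limits in the table are my reading of NVIDIA’s MIG documentation for the A100 80GB (they also explain the “up to 7 VMs” figure: seven 1g.10gb instances), so treat them as assumptions and confirm them against your own nvidia-smi mig -lgip output. The grid_a100d prefix matches the 80GB card used here; the helper names are hypothetical.

```python
from typing import Dict, List, Tuple

# Assumed A100 80GB GPU Instance profiles:
# name -> (profile ID for -cgi, max instances per GPU).
A100_80GB_GI_PROFILES: Dict[str, Tuple[int, int]] = {
    "1g.10gb": (19, 7),
    "1g.20gb": (15, 4),
    "2g.20gb": (14, 3),
    "3g.40gb": (9, 2),
    "4g.40gb": (5, 1),
    "7g.80gb": (0, 1),
}


def create_gi_command(profile: str, gpu: int = 0) -> List[str]:
    """nvidia-smi command that creates one GPU Instance of `profile`."""
    profile_id, _max_instances = A100_80GB_GI_PROFILES[profile]
    return ["nvidia-smi", "mig", "-i", str(gpu), "-cgi", str(profile_id)]


def create_ci_command(gi_id: int, ci_profile_id: int) -> List[str]:
    """nvidia-smi command that creates a Compute Instance in GPU Instance `gi_id`."""
    return ["nvidia-smi", "mig", "-gi", str(gi_id), "-cci", str(ci_profile_id)]


def vgpu_profile_for(mig_profile: str, board: str = "a100d") -> str:
    """Map a MIG profile such as '2g.20gb' to a vGPU profile name such as
    'grid_a100d-2-20c' (slices, frame buffer in GB, 'c' for Compute)."""
    slices, memory = mig_profile.split(".")
    return f"grid_{board}-{slices.rstrip('g')}-{memory.rstrip('gb')}c"
```

With the values used in this post, create_gi_command("2g.20gb") reproduces nvidia-smi mig -i 0 -cgi 14, create_ci_command(5, 1) reproduces nvidia-smi mig -gi 5 -cci 1, and vgpu_profile_for("2g.20gb") gives the grid_a100d-2-20c profile to pick in the VM settings.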

Delete instances and disable MIG

1. First remove any compute instances with the following command:

nvidia-smi mig -gi 5 -ci 0 -dci

If you try to delete a GPU instance without first deleting its compute instances, you will get the following error message:

Unable to destroy GPU instance ID 5 from GPU 0: In use by another client Failed to destroy GPU instances: In use by another client

2. Remove the GPU instance:

nvidia-smi mig -gi 5 -dgi

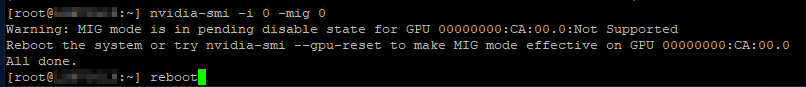

3. To disable MIG on GPU 0, use the following command:

nvidia-smi -i 0 -mig 0

4. After resetting the GPU or rebooting the host, when the host is back up in maintenance mode, issue the command:

nvidia-smi

and you should see that MIG is now disabled.
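The teardown order matters: compute instances first, then the GPU instance, and only then MIG mode itself. The hypothetical helper below encodes that order as a list of nvidia-smi commands, mirroring the commands used in this section, which do not specify a GPU for the instance deletions. It is a sketch that only builds commands; running them, and the GPU reset or reboot afterwards, is left to you.

```python
from typing import Dict, List


def teardown_commands(gi_to_cis: Dict[int, List[int]], gpu: int = 0) -> List[List[str]]:
    """Ordered nvidia-smi commands: each GPU Instance's Compute Instances,
    then the GPU Instance itself, and finally MIG mode off for `gpu`."""
    commands: List[List[str]] = []
    for gi_id, ci_ids in gi_to_cis.items():
        for ci_id in ci_ids:
            # Compute Instances must go first, otherwise -dgi fails with
            # "In use by another client".
            commands.append(["nvidia-smi", "mig", "-gi", str(gi_id), "-ci", str(ci_id), "-dci"])
        commands.append(["nvidia-smi", "mig", "-gi", str(gi_id), "-dgi"])
    commands.append(["nvidia-smi", "-i", str(gpu), "-mig", "0"])
    return commands
```

For the instances created earlier in this post, teardown_commands({5: [0]}) yields the three commands of steps 1 to 3 in the order shown above.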

Once everything is configured correctly, you can choose the vGPU profile for the virtual machine, install the vGPU driver in the VM’s guest operating system, and apply the license.